The Evolving Lens: Creativity, Security, and the New Age of AI

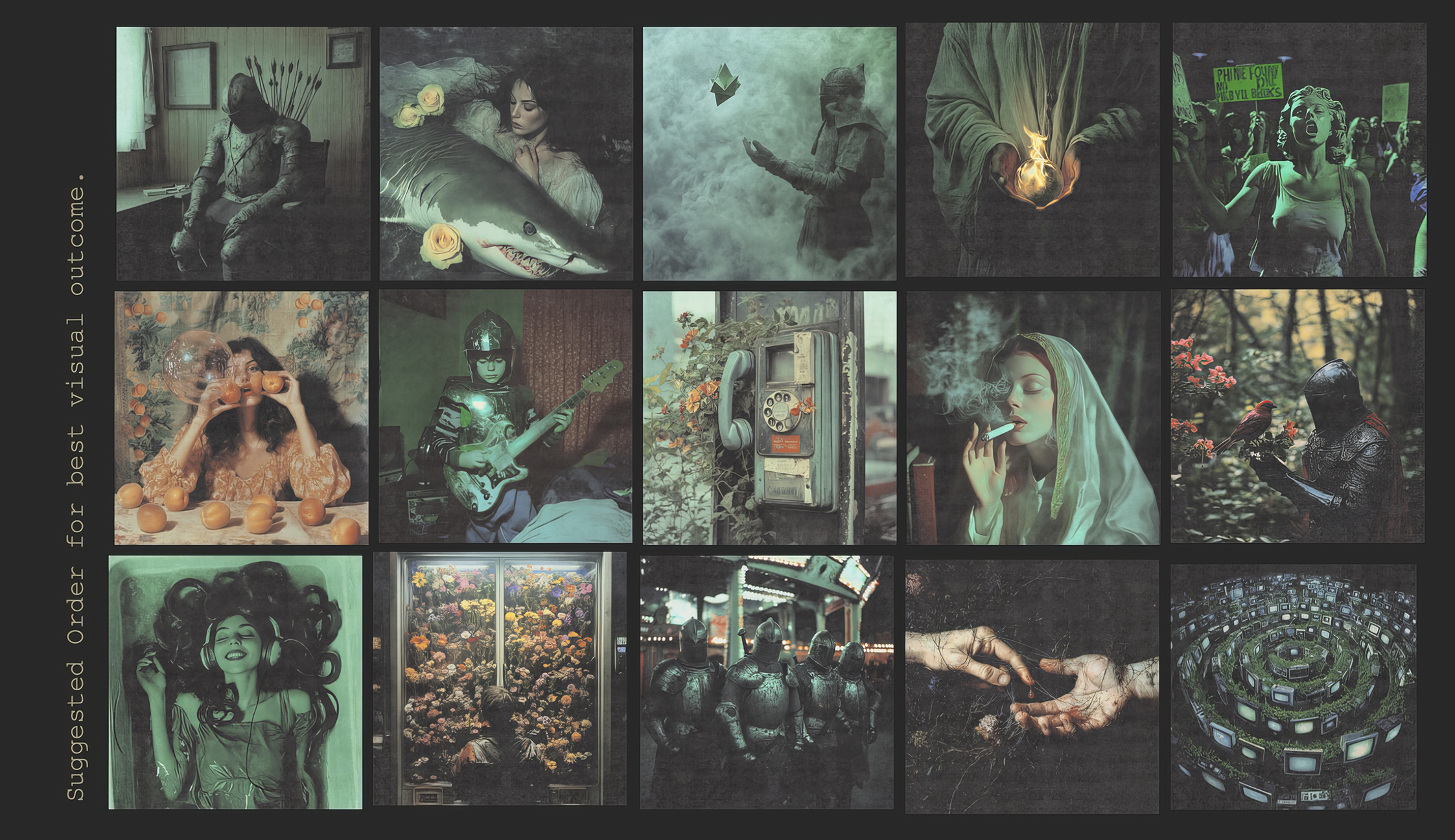

Lately, I’ve been experimenting with merging traditional photography, Photoshop compositing, and AI-driven image generation. What started as an exploration of aesthetics quickly turned into something larger—a meditation on power and responsibility. The results are astonishing, yes. But the deeper question is: What does it mean to create safely in an age where our tools think alongside us?

AI has changed how I approach art. What once required manual layering and compositing can now be expanded, reshaped, or even reimagined through prompts. Yet that acceleration comes with a price. When you open the door to this kind of collaboration, you’re not just editing images; you’re asking a machine to interpret human intention. That’s thrilling, but it also demands caution.

We’ve entered a space where every creative act carries both potential and risk. The same algorithms that help visualize an idea can just as easily replicate a person, fabricate evidence, or erase authenticity. In art, this tension can lead to innovation. In society, it can lead to chaos.

The Historical Arc of Safety and Innovation

Throughout history, every leap forward has required us to redefine what “safe progress” means. Fire allowed warmth but demanded respect. The forge birthed both tools and weapons. The Industrial Revolution gave us prosperity and pollution in equal measure. The Digital Age connected billions and exposed us all.

AI is simply the next chapter in this long story—a continuation of our ancient pursuit of comfort and control. But unlike the hammer or the printing press, AI learns from us. It mirrors our ethics, biases, and intentions. If we feed it carelessly, it reflects carelessness back.

Our civilization has always been built on managing dualities: creation and destruction, risk and reward, freedom and control. AI compresses these opposites into a single act of computation. The challenge now isn’t just technical—it’s moral.

Safety as the New Medium

In photography, light is the medium. In AI, safety might be the new one.

We’re not simply experimenting with pixels anymore; we’re shaping systems that learn how to see. That’s a profound shift in authorship. When I work with AI to generate images, I’m acutely aware that the tool is absorbing patterns—not just from data, but from humanity’s collective digital fingerprint.

That’s why security in this context isn’t just about protecting code. It’s about protecting truth, creative integrity, and the human spirit that drives innovation. Ethical frameworks, transparency, and controlled datasets aren’t bureaucratic burdens—they’re artistic boundaries. They make space for creativity to thrive without corruption.

The Creator’s Responsibility

AI is a mirror that reflects our best and worst instincts. We can use it to build or to manipulate, to enhance beauty or to counterfeit it. Artists and technologists share a duty to shape this reflection with awareness.

We must question not just what AI can create, but why it creates—and who controls the output. In a world of synthetic media and deepfakes, maintaining trust in imagery is as important as mastering the craft itself.

That means implementing digital watermarking, metadata standards, and clear consent frameworks in creative workflows. It also means understanding that the line between enhancement and deception is razor-thin.
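To make the metadata and consent part of that concrete, here is a minimal sketch of what a provenance record for a finished image might look like. It is not an implementation of any published standard: the field names (`tools`, `ai_assisted`, `consent_obtained`) and both function names are hypothetical, chosen only for illustration. It uses nothing beyond Python's standard library.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_provenance_record(image_bytes, tools, ai_assisted, consent_obtained):
    """Describe how an image was made. The schema is hypothetical, not a standard."""
    return {
        # Fingerprint of the exact bytes that were published
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
        "tools": list(tools),
        "ai_assisted": bool(ai_assisted),
        # Whether the people depicted agreed to this use
        "consent_obtained": bool(consent_obtained),
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }


def matches_record(image_bytes, record):
    """Return True if the bytes still hash to the value stored in the record."""
    return hashlib.sha256(image_bytes).hexdigest() == record["sha256"]


if __name__ == "__main__":
    original = b"stand-in for real image bytes"
    record = build_provenance_record(
        original, ["camera", "Photoshop", "image generator"], True, True
    )
    print(json.dumps(record, indent=2))
    print(matches_record(original, record))         # unchanged file
    print(matches_record(original + b"!", record))  # altered file
```

A record like this only shows that a file has not changed since the record was written. It is not a watermark, and it proves nothing about who made the image unless the record itself is signed or stored somewhere trusted.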

The Future of Safe Creation

AI isn’t going away. Nor should it. Like every invention before it, its impact will depend on the principles guiding its use. For artists, the challenge is to stay both curious and vigilant—to embrace the new tools while setting ethical guardrails.

In my own process, that means seeing safety not as a limitation, but as structure—a way to preserve meaning in an era of infinite manipulation. It’s about ensuring that creation remains an act of intention, not accident.

We’ve always built tools to amplify our potential. AI just happens to amplify our mistakes as readily as our intentions.

The key is balance. Use the fire, but build the hearth.
